Autonomous Navigation in Robots

Have you ever wondered how robots navigate an unknown environment and get themselves from point A to point B? You might be surprised to learn that the concepts involved in this intriguing process may already be familiar to you.

Let's dive into the basics of how robots perceive and navigate their world. Generally speaking, a few components are required:

- A source of depth data, such as a LIDAR or depth camera.

- Some form of odometry, like wheel encoders or an IMU.

- A computer to crunch the numbers and make decisions.

Autonomous navigation has long been a subject of study in robotics, from early research and the first commercial robots capable of it in the 1980s, to the well-known Roomba robotic vacuum cleaner, to cutting-edge technology developed by the military.

| The Roomba robotic vacuum cleaner |

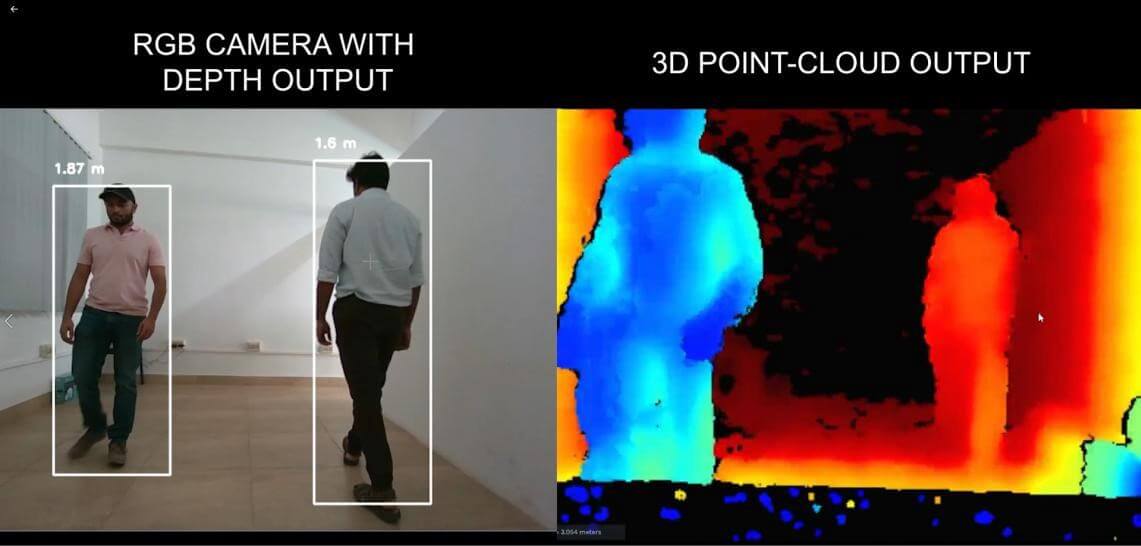

| The RGB and depth outputs of a depth camera |

The output from these sensors is used to build a "map" of the space the robot is navigating. The map tells the robot where it can and can't go. The map can then be modified to account for factors such as the robot's own dimensions, producing a costmap.

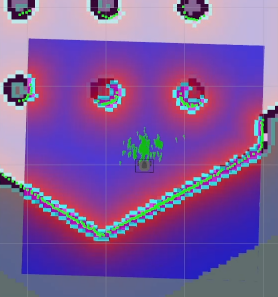

| Costmap of an environment |
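To make the idea of accounting for the robot's dimensions concrete, here is a minimal sketch of one common approach, obstacle inflation. It assumes the map is a 2D grid where `1` marks an obstacle and `0` marks free space, and that the robot can be approximated by a circle whose radius is given in grid cells. The function name `inflate` and the grid encoding are illustrative choices, not part of any particular library.

```python
import math


def inflate(grid, robot_radius_cells):
    """Return a costmap in which every cell within robot_radius_cells
    of an obstacle is also marked as blocked (1)."""
    rows, cols = len(grid), len(grid[0])
    costmap = [[0] * cols for _ in range(rows)]
    r = robot_radius_cells
    for i in range(rows):
        for j in range(cols):
            if grid[i][j] != 1:
                continue  # only obstacles spread cost to their neighbours
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ni, nj = i + di, j + dj
                    inside = 0 <= ni < rows and 0 <= nj < cols
                    # Euclidean distance test gives a circular footprint
                    if inside and math.hypot(di, dj) <= r:
                        costmap[ni][nj] = 1
    return costmap


# A 5x5 map with a single obstacle in the centre (assumed example data).
example_grid = [[0] * 5 for _ in range(5)]
example_grid[2][2] = 1
example_costmap = inflate(example_grid, 1)
```

With a radius of one cell, the obstacle grows to cover its four direct neighbours, so a planner that treats the robot as a single point will still keep the robot's body clear of the obstacle. Real costmaps often use graded costs instead of a hard blocked/free split; this sketch keeps only the binary case.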

The costmap shows which parts of the map can and cannot be visited. Armed with this knowledge, the computer can use a path planning algorithm such as A* to compute a path that takes both path length and obstacles into account.
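As a rough illustration of path planning on a costmap, the following sketch runs A* on a small grid. It assumes a binary costmap (`1` is blocked, `0` is free), movement in four directions with a cost of one per step, and the Manhattan distance as the heuristic. The function name `astar` and the example grid are made up for this sketch.

```python
import heapq


def astar(costmap, start, goal):
    """Return the shortest 4-connected path from start to goal as a list
    of (row, col) cells, or None if the goal cannot be reached."""
    rows, cols = len(costmap), len(costmap[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (f, g, cell)
    came_from = {start: None}
    best_g = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale heap entry, a cheaper route was found already
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if costmap[nxt[0]][nxt[1]] == 1:
                continue  # blocked cell
            new_g = g + 1
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


# A wall across the middle row leaves a single gap on the right (assumed data).
example_map = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
example_path = astar(example_map, (0, 0), (2, 0))
```

Here the planner has to travel along the top row, pass through the gap in the wall, and come back along the bottom row. The heuristic steers the search toward the goal, while the accumulated step cost keeps the resulting path as short as the obstacles allow.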

The role of the odometry source is to track the robot's movement, specifically its linear and angular velocities and its current orientation and location. Combined with the depth information, this can be used to estimate the robot's movement and position with respect to its environment.
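A minimal sketch of how velocities can be turned into a position estimate is shown below. It assumes a robot moving in a plane whose pose is `(x, y, theta)`, and it uses a simple Euler integration step over a small time interval `dt`. The function name `integrate_odometry` is hypothetical, and this covers only dead reckoning from odometry, not the combination with depth data.

```python
import math


def integrate_odometry(pose, linear_velocity, angular_velocity, dt):
    """Advance a planar pose (x, y, theta) by one time step of length dt,
    assuming the velocities stay constant during that step."""
    x, y, theta = pose
    x += linear_velocity * math.cos(theta) * dt
    y += linear_velocity * math.sin(theta) * dt
    theta += angular_velocity * dt
    # Wrap the heading into [-pi, pi) so it does not grow without bound
    theta = (theta + math.pi) % (2 * math.pi) - math.pi
    return (x, y, theta)


# Drive straight along the x axis at 1 m/s for 2 s (assumed example values).
straight_pose = integrate_odometry((0.0, 0.0, 0.0), 1.0, 0.0, 2.0)
```

Because every step adds a little measurement error, an estimate built this way drifts over time, which is one reason odometry is used together with the depth information instead of on its own.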

In practice, software implementing all of this behavior usually already exists and only needs to be modified to work with a particular robot or environment. One example is the ROS navigation stack.

This article was authored by Zahran Sajid, the research head at ACM VITCC.

References:

- http://wiki.ros.org/

- https://en.wikipedia.org/wiki/Autonomous_robot#Autonomous_navigation
